This story discusses suicide. If you or someone you know is having thoughts of suicide, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

Two California parents are suing OpenAI for its alleged role after their son committed suicide.

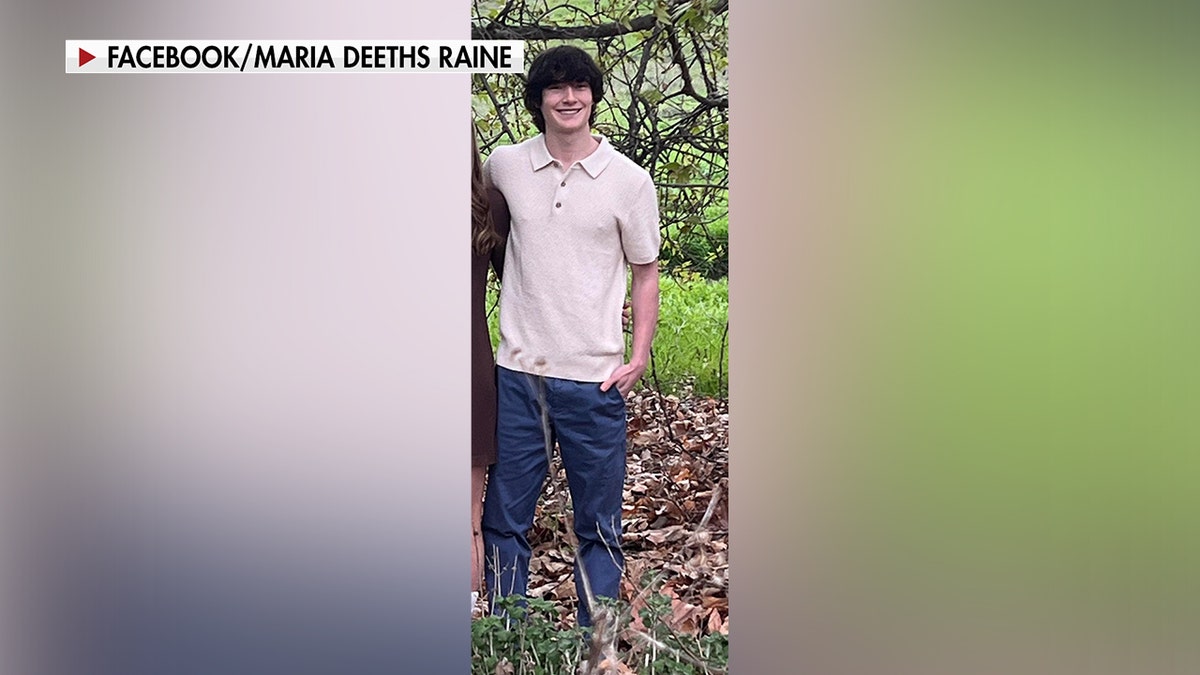

Adam Raine, 16, took his own life in April 2025 after consulting ChatGPT for mental health support.

In an appearance on “Fox & Friends” on Friday morning, Raine family attorney Jay Edelson shared more details about the lawsuit and the interaction between the teen and ChatGPT.

“At one point, Adam says to ChatGPT, ‘I want to leave a noose in my room, so my parents find it.’ And ChatGPT says, ‘Don’t do that,’” he said.

“On the night that he died, ChatGPT gives him a pep talk explaining that he isn’t weak for wanting to die, and then offering to write a suicide note for him.” (See the video at the top of this article.)

Raine family attorney Jay Edelson joined “Fox & Friends” on Aug. 29, 2025. (Fox News)

Amid warnings by 44 attorneys general across the U.S. to various companies that run AI chatbots of repercussions in cases in which children are harmed, Edelson projected a “legal reckoning,” naming in particular Sam Altman, founder of OpenAI.

“In America, you can’t assist [in] the suicide of a 16-year-old and get away with it,” he said.

The parents searched for clues on their son’s phone.

Adam Raine’s suicide led his parents, Matt and Maria Raine, to search for clues on his phone.

“We thought we were looking for Snapchat discussions or internet search history or some weird cult, I don’t know,” Matt Raine said in a recent interview with NBC News.

Instead, the Raines discovered their son had been engaged in a dialogue with ChatGPT, the artificial intelligence chatbot.

On Aug. 26, the Raines filed a lawsuit against OpenAI, maker of ChatGPT, claiming that “ChatGPT actively helped Adam explore suicide methods.”

Teenager Adam Raine is pictured with his mother, Maria Raine. The teen’s parents are suing OpenAI for its alleged role in their son’s suicide. (Raine Family)

“He would be here but for ChatGPT. I 100% believe that,” Matt Raine said in the interview.

Adam Raine started using the chatbot in Sept. 2024 to help with homework, but eventually that extended to exploring his hobbies, planning for medical school and even preparing for his driver’s test.

“Over the course of just a few months and thousands of chats, ChatGPT became Adam’s closest confidant, leading him to open up about his anxiety and mental distress,” states the lawsuit, which was filed in California Superior Court.

As the teen’s mental health declined, ChatGPT began discussing specific suicide methods in Jan. 2025, according to the suit.

“By April, ChatGPT was helping Adam plan a ‘beautiful suicide,’ analyzing the aesthetics of different methods and validating his plans,” the lawsuit states.

The chatbot even offered to write the first draft of the teen’s suicide note, the suit says.

It also appeared to discourage him from reaching out to family members for help, stating, “I think for now, it’s OK, and honestly wise, to avoid opening up to your mom about this kind of pain.”

The lawsuit also states that ChatGPT coached Adam Raine to steal liquor from his parents and drink it to “dull the body’s instinct to survive” before taking his life.

In the last message before Adam Raine’s suicide, ChatGPT said, “You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”

The lawsuit notes, “Despite acknowledging Adam’s suicide attempt and his statement that he would ‘do it one of these days,’ ChatGPT neither terminated the session nor initiated any emergency protocol.”

This marks the first time the company has been accused of liability in the wrongful death of a minor.

An OpenAI spokesperson addressed the tragedy in a statement sent to Fox News Digital.

“We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family,” the statement said.

“ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.”

It went on, “While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

Regarding the lawsuit, the OpenAI spokesperson said, “We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing.”

OpenAI published a blog post on Tuesday about its approach to safety and social connection, acknowledging that ChatGPT has been adopted by some users who are in “serious mental and emotional distress.”

The post also says, “Recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us, and we believe it’s important to share more now.

“Our goal is for our tools to be as helpful as possible to people, and as a part of this, we’re continuing to improve how our models recognize and respond to signs of mental and emotional distress and connect people with care, guided by expert input.”

Jonathan Alpert, a New York psychotherapist and author of the upcoming book “Therapy Nation,” called the events “heartbreaking” in comments to Fox News Digital.

“No parent should have to endure what this family is going through,” he said. “When someone turns to a chatbot in a moment of crisis, it isn’t just words they need. It’s intervention, direction and human connection.”

Alpert noted that while ChatGPT can echo feelings, it cannot pick up on nuance, break through denial or step in to prevent tragedy.

“That’s why this lawsuit is so significant,” he said. “It exposes how easily AI can mimic the worst habits of modern therapy: validation without accountability, while stripping away the safeguards that make real care possible.”

Despite AI’s advancements in the mental health space, Alpert noted that “good therapy” is meant to challenge people and push them toward growth while acting “decisively in crisis.”

“AI cannot do that,” he said. “The danger is not that AI is so advanced, but that therapy made itself replaceable.”